v1.34.1: Model Context Protocol support

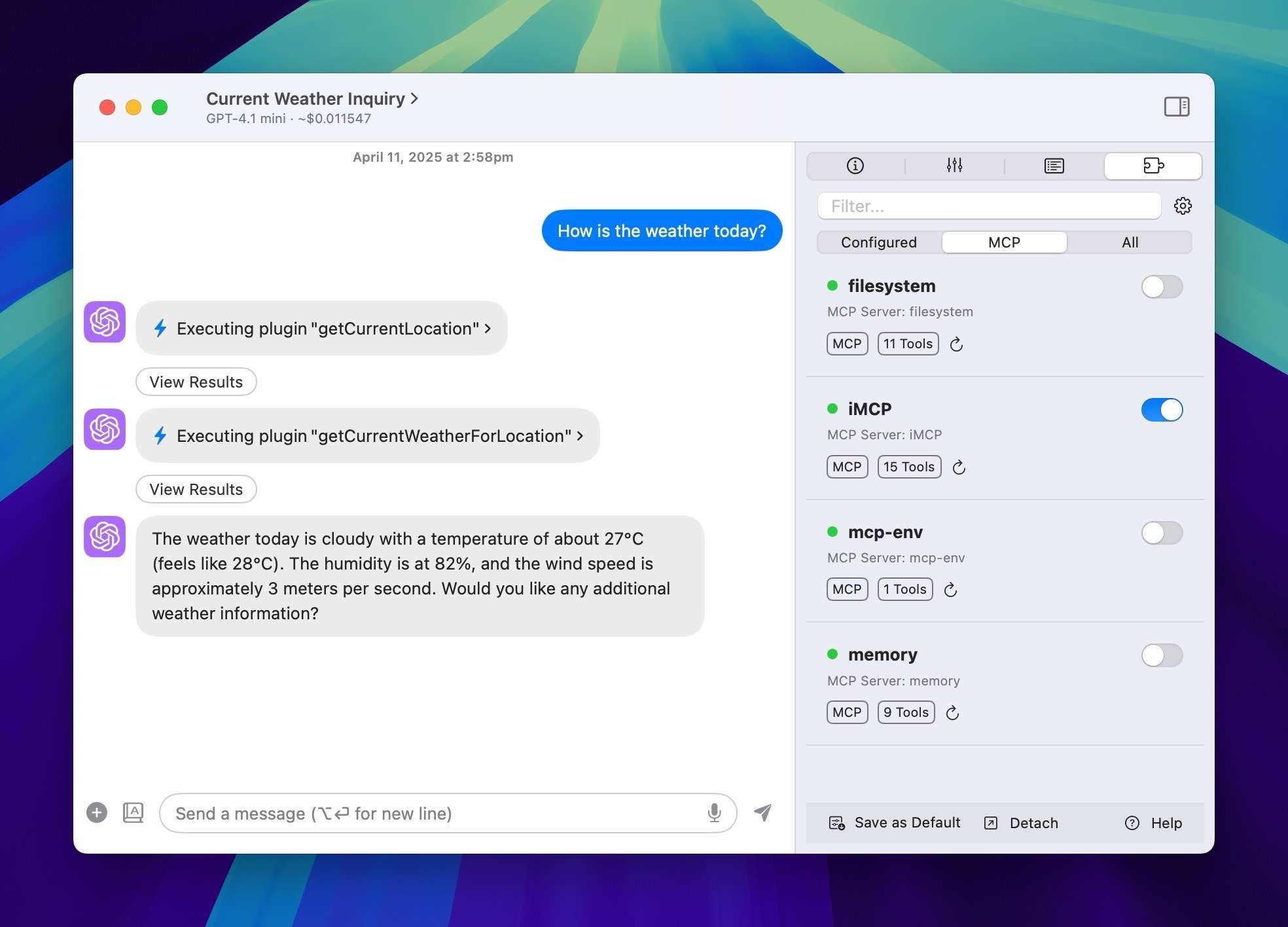

- New: BoltAI is now an MCP client. MCP servers allow you to extend BoltAI's capabilities with custom tools and commands

- New: Added support for GitHub Copilot and Jina DeepSearch

- New: Added support for OpenAI's new models: o3, o4-mini, GPT-4.1, GPT-4.1 mini, GPT-4.1 nano

- New: More UI customization options for the App Sidebar and Chat Input

- Improved: Automatically fetch the model list from Google AI

What's new?

Model Context Protocol

The most significant change in this release is MCP support. An MCP server (short for Model Context Protocol server) is a lightweight program that exposes specific capabilities to AI models through a standardized protocol, enabling them to interact with external data sources and tools. MCP servers allow you to extend BoltAI's capabilities with custom tools and commands.

To learn more about using MCP servers in BoltAI, see https://boltai.com/docs/plugins/mcp-servers

New AI Providers

BoltAI now supports two new AI service providers: GitHub Copilot* and Jina DeepSearch.

*Note: an active Copilot subscription is required.

OpenAI's new models

This release adds support for the latest models: o3, o4-mini, and the GPT-4.1 model family. Note that to stream the o3 model, you will need to verify your organization with OpenAI. Alternatively, you can disable streaming for o3 in Settings > Advanced > Feature Flags.

More customizations

Go to Settings > Appearance to personalize the app. You can now customize the App Sidebar and the Chat Input Box.

Enjoy. See you in the next update 👋