How to use a local Whisper instance in BoltAI

Whisper is a speech-to-text model from OpenAI that you can use to transcribe audio files. In BoltAI, you can use Whisper via the OpenAI API, Groq, or a custom server.

Follow this step-by-step guide to set up and use a local Whisper instance in BoltAI.

0. Prerequisites

Make sure your local Whisper instance is up and running. Setting it up is outside the scope of this guide, but here are some pointers:

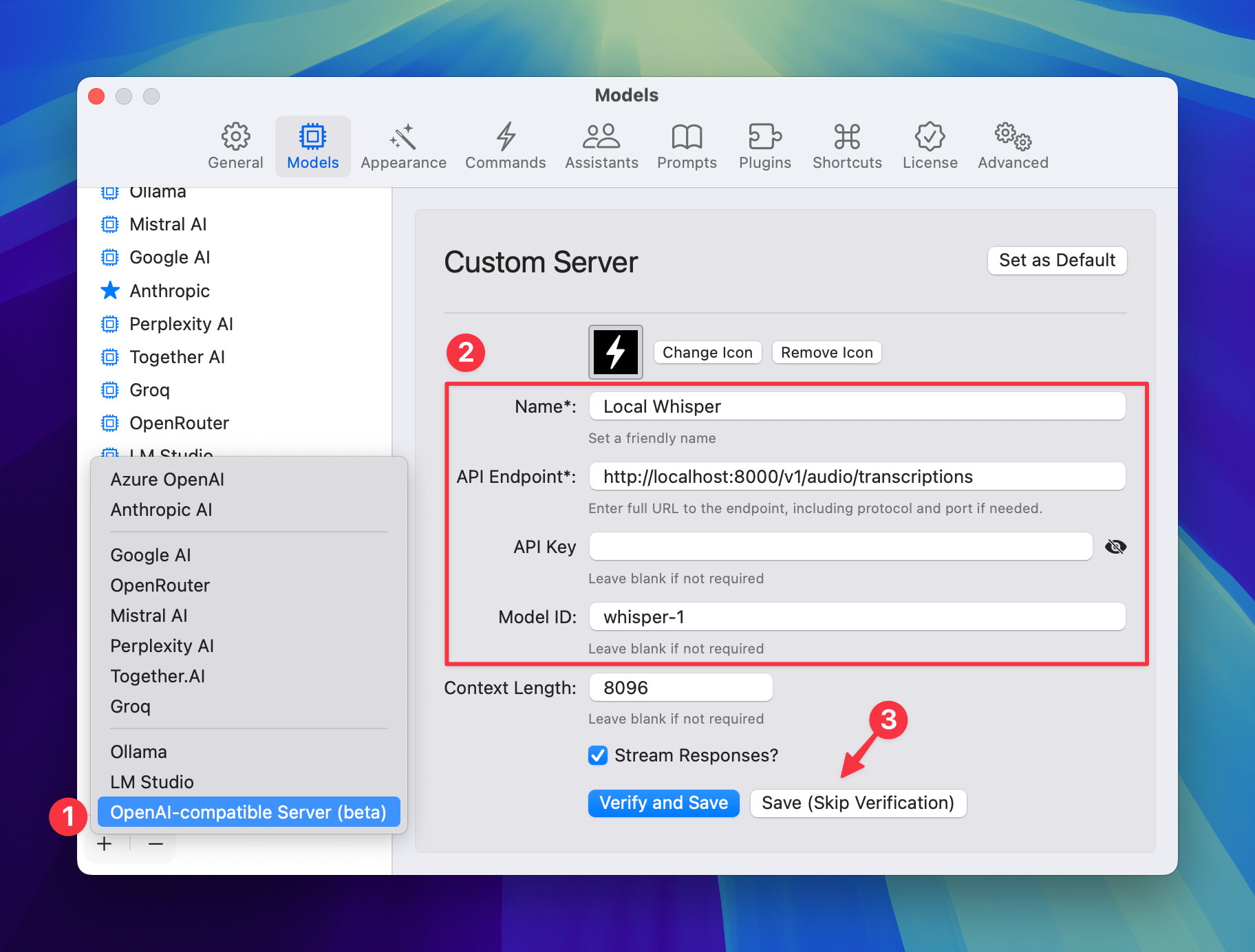
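Before pointing BoltAI at the server, it can help to confirm that it actually answers transcription requests. The Python sketch below does that with only the standard library. It assumes the server implements OpenAI's `/v1/audio/transcriptions` route (a multipart upload with `file` and `model` fields, answered by JSON containing a `text` key); the address `http://localhost:8000` and the model name `whisper-1` are placeholders, so substitute whatever your own server uses.

```python
import json
import os
import urllib.request
import uuid

# Placeholder address (assumption): change the host, port and path to match
# your server. Assumes an OpenAI-style /v1/audio/transcriptions route.
ENDPOINT = "http://localhost:8000/v1/audio/transcriptions"


def build_transcription_request(url, filename, audio, model="whisper-1"):
    """Build a multipart/form-data POST carrying the `model` and `file`
    fields used by OpenAI's transcription API."""
    boundary = uuid.uuid4().hex
    model_part = (
        f"--{boundary}\r\n"
        'Content-Disposition: form-data; name="model"\r\n\r\n'
        f"{model}\r\n"
    )
    file_header = (
        f"--{boundary}\r\n"
        f'Content-Disposition: form-data; name="file"; filename="{filename}"\r\n'
        "Content-Type: application/octet-stream\r\n\r\n"
    )
    closing = f"--{boundary}--\r\n"
    # Parts are separated by the boundary; the final boundary ends with "--".
    body = model_part.encode() + file_header.encode() + audio + b"\r\n" + closing.encode()
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": f"multipart/form-data; boundary={boundary}"},
        method="POST",
    )


def transcribe(path, url=ENDPOINT, model="whisper-1"):
    """Upload one audio file and return the `text` field of the JSON reply."""
    with open(path, "rb") as f:
        audio = f.read()
    req = build_transcription_request(url, os.path.basename(path), audio, model)
    with urllib.request.urlopen(req, timeout=120) as resp:
        return json.loads(resp.read())["text"]
```

For example, `print(transcribe("sample.wav"))` should print the recognized text if the server is reachable. A connection error or an HTTP 404 usually points to a wrong host, port, or path.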

1. Add a custom service in BoltAI

- Go to Settings > Models. Click the plus (+) button and select "OpenAI-compatible Server".

- Fill in the form, making sure to enter the full URL of the API endpoint.

- Click "Save (Skip Verification)".

Set up a custom service in BoltAI

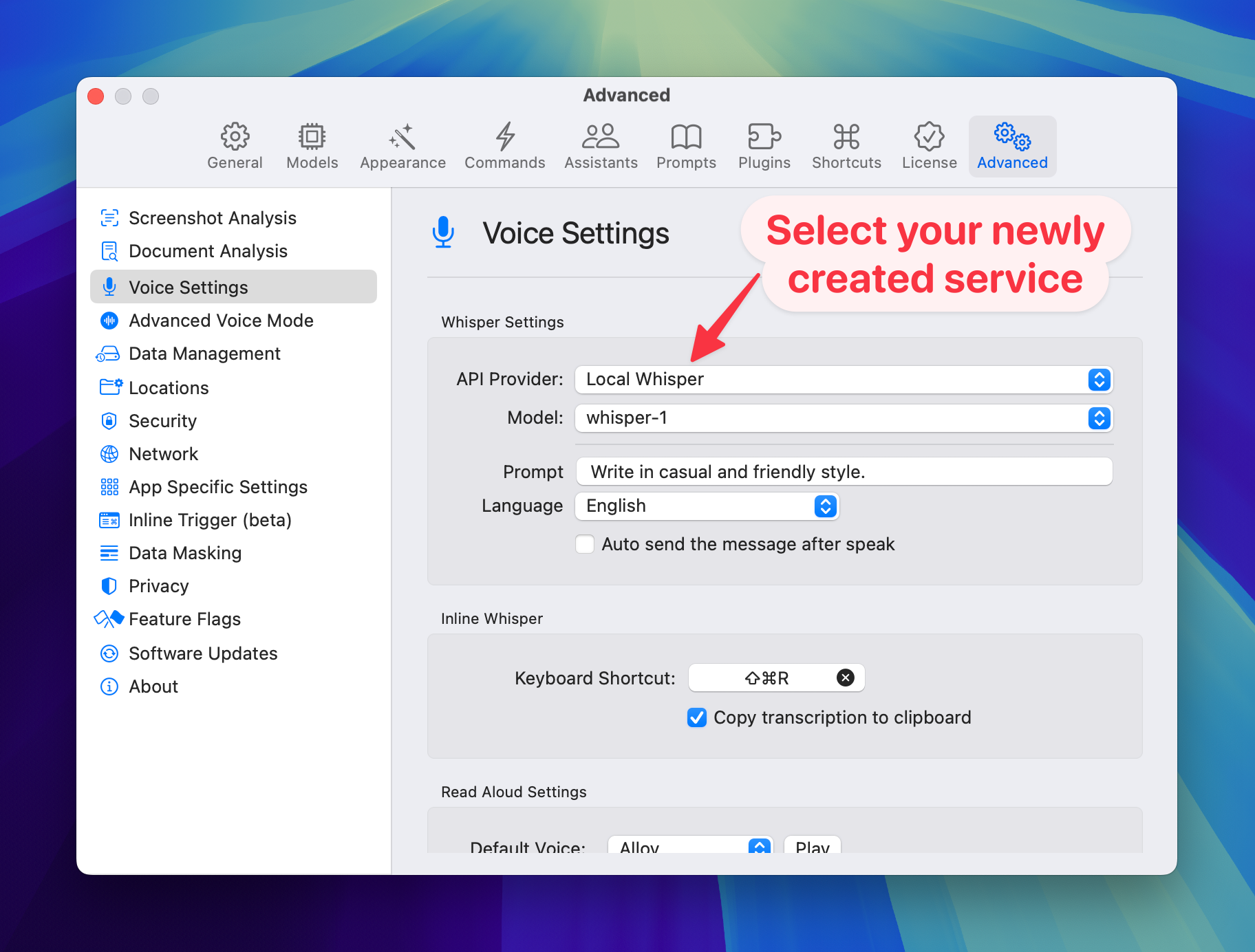
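A common slip in the form is entering only the server's base address (host and port) instead of the full endpoint URL. The small Python helper below illustrates the difference by joining a base URL and a route into one absolute URL. The function name is hypothetical, and `/v1/audio/transcriptions` is used only as an example of an OpenAI-style route; check which path your server exposes and what BoltAI expects in that field.

```python
from urllib.parse import urlsplit


def full_endpoint_url(base_url: str, path: str) -> str:
    """Join a server base URL and an API path into one absolute URL,
    tolerating a missing or duplicated slash at the boundary."""
    parts = urlsplit(base_url)
    # Reject inputs such as "localhost:8000" that lack an http(s) scheme or host.
    if parts.scheme not in ("http", "https") or not parts.netloc:
        raise ValueError(f"not an absolute http(s) URL: {base_url!r}")
    return base_url.rstrip("/") + "/" + path.lstrip("/")
```

For instance, `full_endpoint_url("http://localhost:8000/", "/v1/audio/transcriptions")` returns `http://localhost:8000/v1/audio/transcriptions`, which is the kind of complete URL the form is asking for.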

2. Configure BoltAI to use this custom service

- Go to Settings > Advanced > Voice Settings

- In the "Whisper Settings" section, select your newly created service ("Local Whisper" in this example)

Now both the main chat UI and Inline Whisper will use this local Whisper instance instead of OpenAI.

Configure BoltAI to use the local Whisper instance

That's it

It's pretty simple, isn't it? If you have any questions, feel free to send me an email. I'm happy to help.

If you are new here, BoltAI is a native macOS app that allows you to access ChatGPT inside any app.