Optimize Ollama Models for BoltAI

BoltAI supports both online and local models. If privacy is your primary concern, you can use BoltAI to interact with your local AI models. We recommend using Ollama.

By default, Ollama uses a context window size of 2048 tokens. This is not ideal for more complex tasks such as document analysis or heavy tool use.

Follow this step-by-step guide to modify the context window size in Ollama.

1. Prepare the Modelfile for the new model

In Ollama, a Modelfile serves as a configuration blueprint for creating and sharing models. To modify the context window size for a model on Ollama, we will need to build a new model with the new num_ctx configuration.

Create a new file named Modelfile with this content:

FROM <your model>

PARAMETER num_ctx <context size>

Here is my Modelfile to build qwen2.5-coder with a 32K context window:

FROM qwen2.5-coder:7b

PARAMETER num_ctx 32000

2. Create a new model based on the modified Modelfile

Run this command to create the new model:

ollama create <model name> -f ./Modelfile

If you've already pulled the base model, this should be very fast. You can verify the result by running ollama list, which should now show the new model (qwen2.5-coder-32k in my case):

> ollama list

NAME ID SIZE MODIFIED

qwen2.5-coder-32k:latest b8989a4336cf 4.7 GB 12 seconds ago

qwen2.5-coder:7b 2b0496514337 4.7 GB 12 seconds ago

qwen2.5-coder:latest 2b0496514337 4.7 GB About a minute ago

llama3.2-vision:latest 38107a0cd119 7.9 GB 5 days ago

llama3.2:3b a80c4f17acd5 2.0 GB 6 weeks ago

llama3.2:1b baf6a787fdff 1.3 GB 6 weeks ago

llama3.1:latest a340353013fd 4.7 GB 3 months ago

nomic-embed-text:latest 0a109f422b47 274 MB 6 months ago

llava:latest 8dd30f6b0cb1 4.7 GB 6 months ago

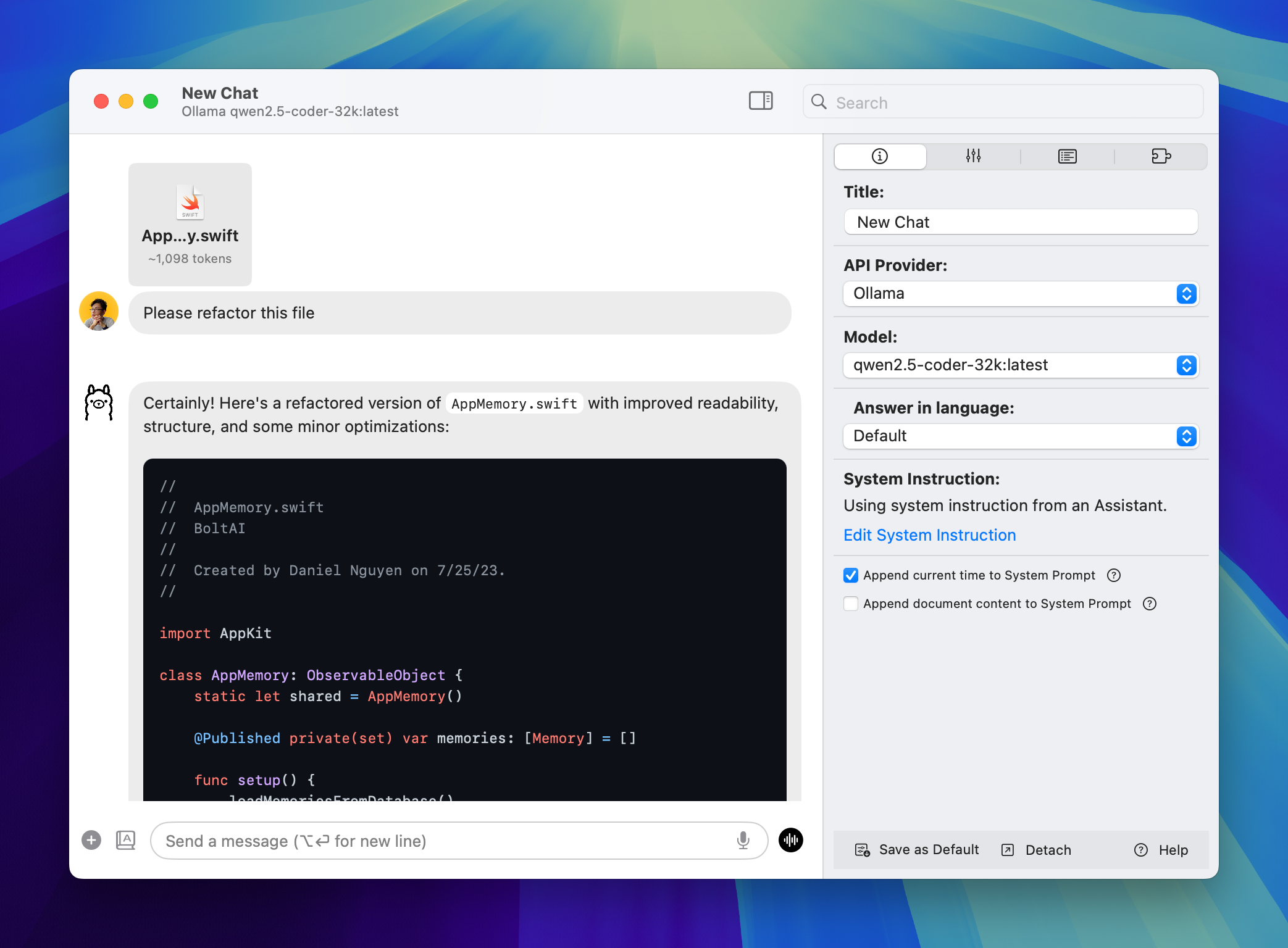
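Steps 1 and 2 can also be run as one short shell script. This is a minimal sketch that reuses the model names from my example above; the variable names are only illustrative, and the script skips the ollama commands if the CLI is not on your PATH.

```shell
# Illustrative values taken from the example above; change them for your own model.
BASE_MODEL="qwen2.5-coder:7b"
NEW_MODEL="qwen2.5-coder-32k"
NUM_CTX=32000

# Step 1: write the Modelfile into the current directory.
cat > Modelfile <<EOF
FROM ${BASE_MODEL}

PARAMETER num_ctx ${NUM_CTX}
EOF

# Step 2: build the new model, then list models to confirm it exists.
if command -v ollama >/dev/null 2>&1; then
  ollama create "${NEW_MODEL}" -f ./Modelfile && ollama list
else
  echo "ollama not found on PATH; Modelfile written but no model created"
fi
```

Keeping the base model, new model name, and context size in variables makes it easy to repeat the process for other models or window sizes.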

3. Try the new model in BoltAI

Go back to BoltAI and refresh the model list.

- Open BoltAI Settings (command + ,)
- Navigate to Models > Ollama
- Click "Refresh"

Start a new chat with this model:

Working with the new model in BoltAI

Setting the context window parameter at runtime

Ollama exposes two sets of APIs: the official Ollama API and an OpenAI-compatible API.

The official Ollama API endpoint lets you set the num_ctx parameter with each request. Unfortunately, this is not possible with the OpenAI-compatible API, which BoltAI currently uses.

In the next version, I will add support for the official Ollama API endpoint. For now, please create a new model with the modified num_ctx parameter.
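For reference, this is roughly what a runtime override looks like against the official Ollama API. It is a sketch, assuming Ollama is listening on its default address (http://localhost:11434) and that qwen2.5-coder:7b is already pulled; the prompt is a placeholder and the variable names are my own.

```shell
# Assumption: Ollama is running locally on its default port.
OLLAMA_URL="http://localhost:11434"

# Request body for the native /api/generate endpoint.
# "options.num_ctx" sets the context window for this request,
# so no custom model is needed.
PAYLOAD='{
  "model": "qwen2.5-coder:7b",
  "prompt": "Summarize what a Modelfile is in one sentence.",
  "stream": false,
  "options": { "num_ctx": 32000 }
}'

# Send the request; print a hint instead of failing silently
# if the server cannot be reached.
curl -s --max-time 120 "${OLLAMA_URL}/api/generate" -d "${PAYLOAD}" \
  || echo "Could not reach Ollama at ${OLLAMA_URL}"
```

BoltAI does not send this option today, which is why the Modelfile approach above is still needed.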

And that's it for now 👋

If you are new here, BoltAI is a native Mac app that lets you use top AI services and local models easily, all in one place.
