v1.13.0 (build 34)

This release improves the chat-with-screenshot feature and adds support for more AI service providers, including local LLM inference servers.

TL;DR

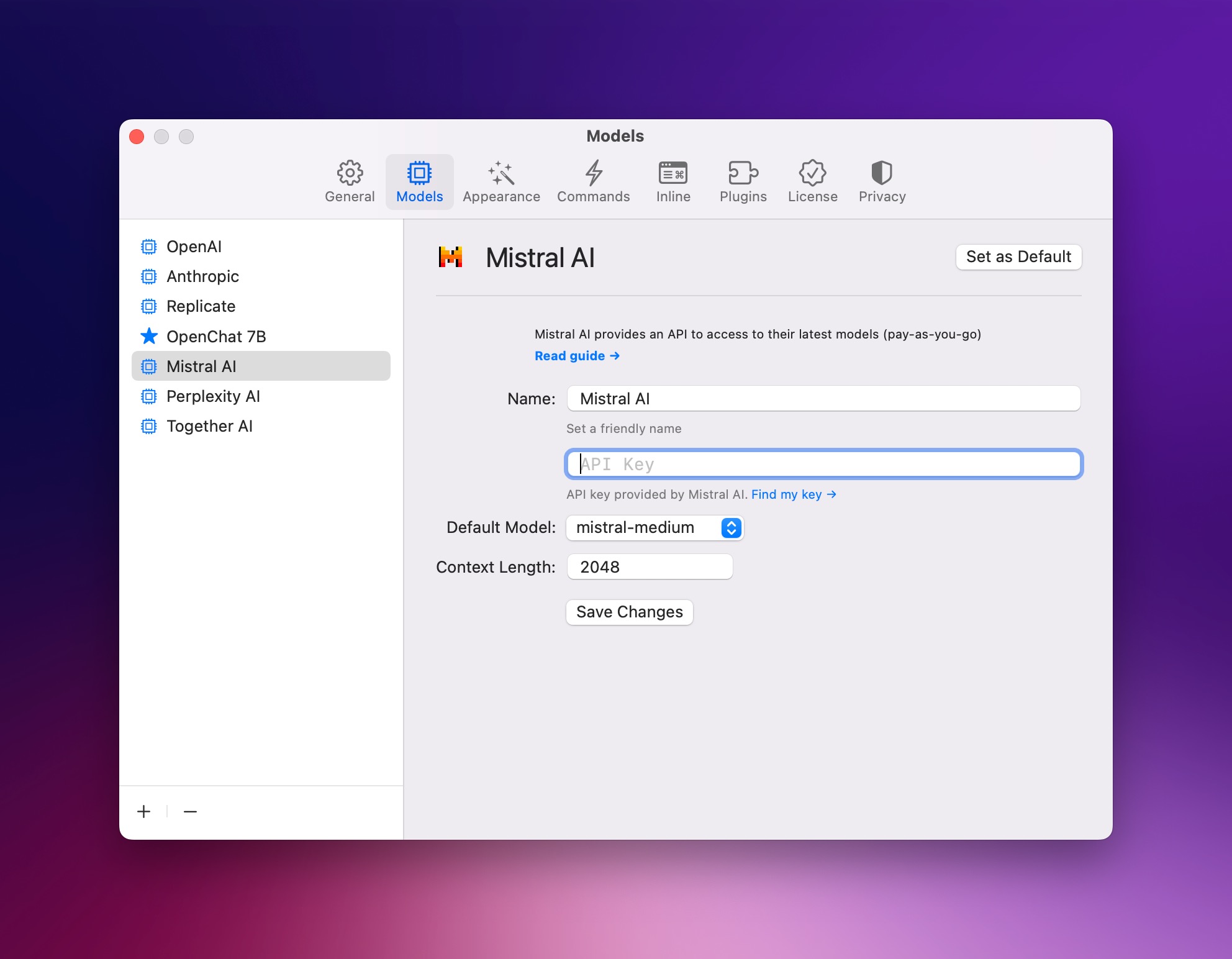

- New: Supports more AI service providers: Mistral AI, Perplexity and Together AI

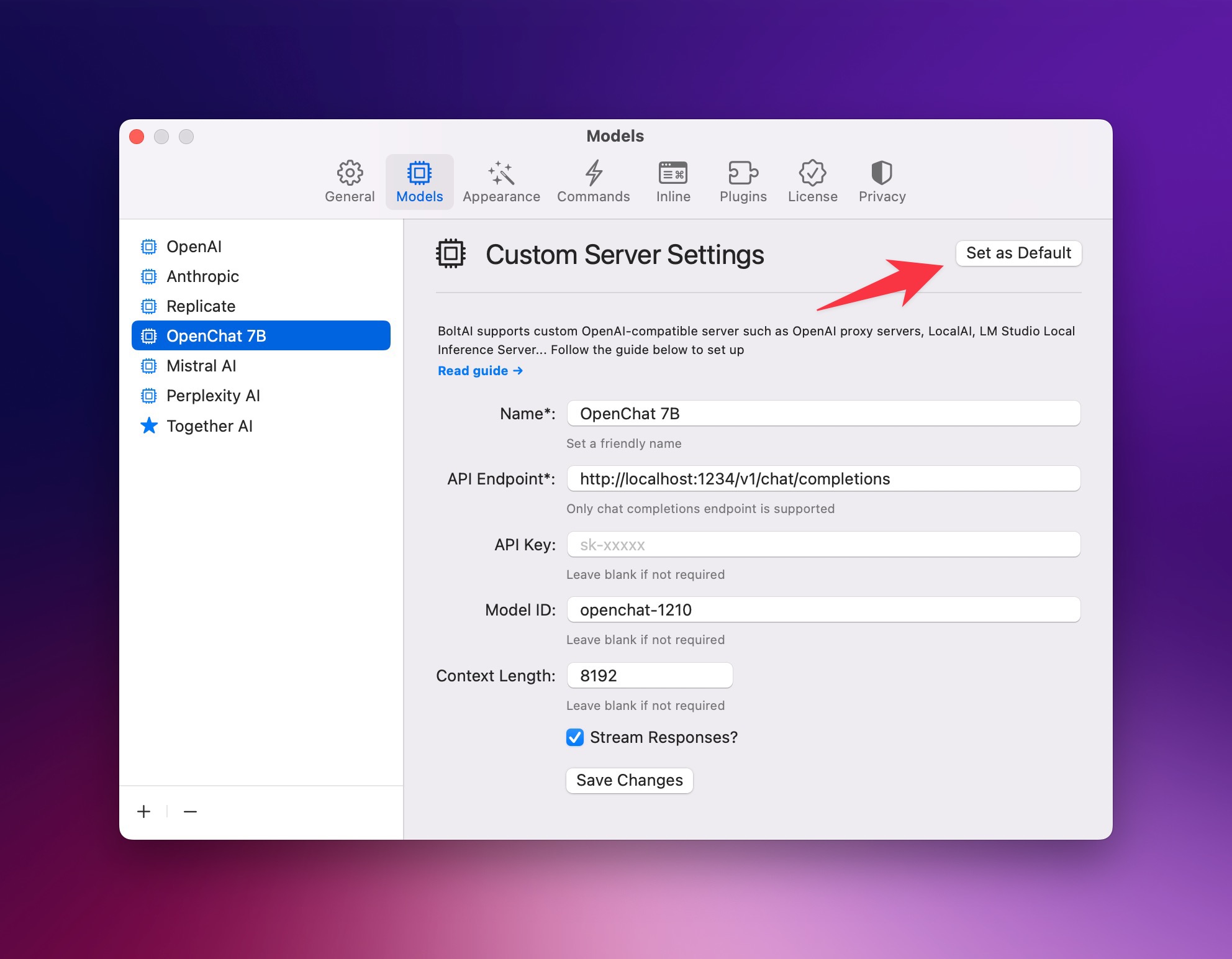

- New: Supports custom OpenAI-compatible servers: OpenAI proxy, LocalAI and LM Studio Inference Server...

- New: Use custom AI service in AI Command (beta)

- New: Incorporate ShotSolve into BoltAI: taking screenshots is now faster & easier

- Improvement: Pasting images directly into the chat input field

- Improvement: Show app icons for inline chats

- Fix: Fixed a race condition in AI Command where messages were not showing

- Fix: Fixed a bug where the message was cleared when switching to another chat

More AI service providers

Following your feedback, I've added support for 3 new AI service providers: Mistral AI, Perplexity & Together AI.

Note that these services all require an API Key. Refer to the guide for each service on how to obtain one.

To set up, go to Settings > Models, click the (+) button, then fill in the form. Pretty straightforward, isn't it?

Use BoltAI with Local Inference Servers

In this version, I also improved the ability to use a custom OpenAI-compatible inference server. It's still in beta, though. If you find an issue, please reach out. I will prioritize this feature.

To start, follow this setup guide.
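For readers wondering what "OpenAI-compatible" means in practice, here is a minimal sketch of the kind of request such a server accepts. It is not BoltAI's internal code. The base URL `http://localhost:1234/v1`, the model name `local-model`, and the helper names `build_chat_request` and `send` are assumptions for illustration; use the address and model your own server reports.

```python
import json
from urllib import request


def build_chat_request(base_url, model, prompt, api_key=None):
    """Build a POST request for an OpenAI-style chat completions endpoint.

    base_url is assumed to already include the version prefix (e.g. ".../v1").
    """
    url = base_url.rstrip("/") + "/chat/completions"
    headers = {"Content-Type": "application/json"}
    # Local servers often need no key; hosted providers expect a Bearer token.
    if api_key:
        headers["Authorization"] = f"Bearer {api_key}"
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers=headers,
        method="POST",
    )


def send(req):
    """Send the request and return the assistant's reply text."""
    with request.urlopen(req, timeout=30) as resp:
        data = json.load(resp)
    return data["choices"][0]["message"]["content"]


if __name__ == "__main__":
    # Hypothetical local server address and model name; adjust to your setup.
    req = build_chat_request("http://localhost:1234/v1", "local-model", "Say hello")
    print(send(req))
```

If a request like this works against your server from the command line, the same base URL should be a reasonable starting point for the custom service form in BoltAI.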

Here is a demo video of using AI Command with a local LLM (note that the Wi-Fi was off). Off-topic: if you're a founder, check out Jason Cohen's blog. His content is amazing.

IMPORTANT: To use this service with an AI Command, set it as the default (screenshot below).

Don't forget to set the service as default for AI Command to work.


Thanks to SilverMarcs for the suggestion

ShotSolve: easily chat with screenshots

Two weeks ago, I started a new project called ShotSolve. It's a free app that lets you take a screenshot and quickly ask GPT-4 Vision about it.

In this version, I've incorporated its features back into BoltAI. You can now take a screenshot quickly via the menubar, or using the keyboard shortcut Command + Shift + 1, then use GPT-4 Vision to ask about it.

It requires an OpenAI API key, though. In upcoming versions, I will integrate it with an open-source model (LLaVA).
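If you are curious how a screenshot ends up in a chat request, the sketch below builds a user message in the format OpenAI's chat API accepts for vision-capable models: the image is embedded as a base64 data URL next to the text question. This is an illustration only, not ShotSolve's or BoltAI's actual code, and `build_vision_message` is a hypothetical helper name.

```python
import base64


def build_vision_message(png_bytes, question):
    """Return a chat message that pairs a text question with a PNG screenshot."""
    encoded = base64.b64encode(png_bytes).decode("ascii")
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": question},
            {
                "type": "image_url",
                # The image travels inline as a data URL rather than a file upload.
                "image_url": {"url": f"data:image/png;base64,{encoded}"},
            },
        ],
    }
```

The returned dictionary goes into the `messages` list of a chat completions request, sent with your OpenAI API key to a model that supports image input.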

Here is the demo video (from ShotSolve)

Thanks to Travis for the suggestion

Other improvements

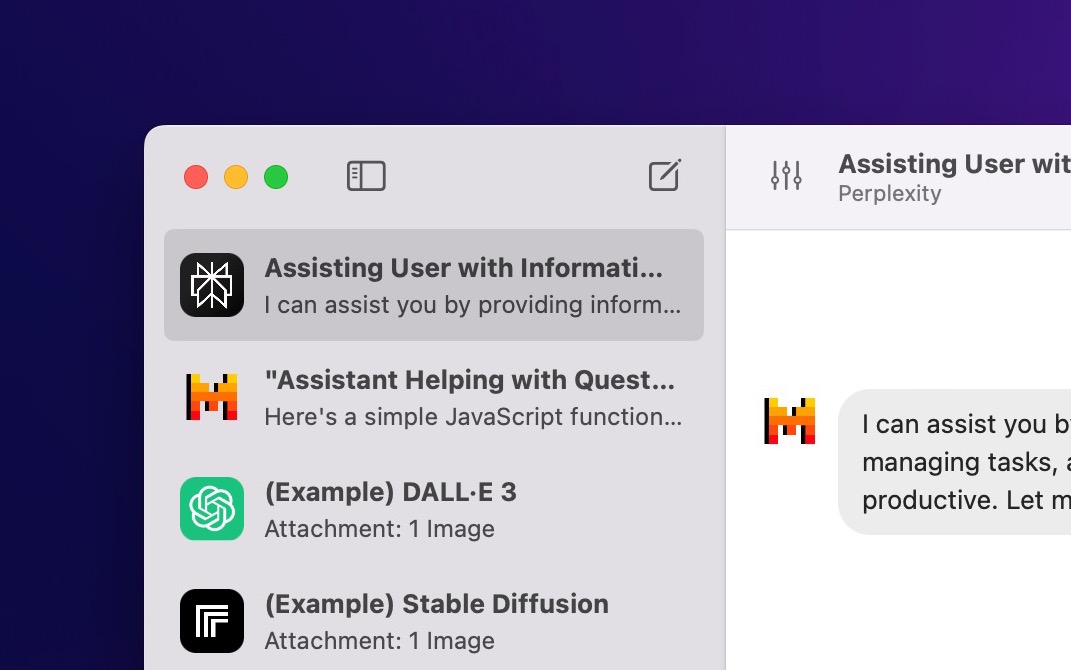

Show app icons for inline chats

One UX issue with AI Command is that the app containing the highlighted text is sometimes not the active app. For example, your active app is BoltAI, but the text you highlighted is in Safari.

This results in the wrong content being used as chat context (the content of the BoltAI app).

I haven't found a solution for this yet. In the meantime, I decided to show the app icon for inline chats, as I think it's more useful.

Let me know what you think.


Other bug fixes & improvements

- Pasting images directly into the chat input field (Thanks to Vimcaw for the suggestion)

- Fixed a bug where the message was cleared when switching to another chat (Thanks to Daniel for the bug report)

- Fixed a race condition in AI Command where messages were not showing

What next?

My bigger plan for 2024 is to focus on 2 big features:

1. Native support for local LLMs

I believe open-source LLMs will keep getting better and better. BoltAI is a good candidate to be a privacy-first AI chat client.

2. Bring the functionality of GPTs to BoltAI.

It's not exactly the same as GPTs, but I believe AI Assistant is the future of BoltAI, not just a better UI or other convenient chat features.

We want to get our jobs done, not just have a tool to do them. A custom-built AI Assistant is the best way to do that, in my opinion.

Please help me build this vision. If you have any ideas or suggestions, post them on our feedback board: https://boltai.canny.io

Thanks again for your continued support 🙏

Daniel