BoltAI v1.8.0 (build 24)

This release focuses on supporting more API Providers: Anthropic, Azure OpenAI, OpenRouter... Read on ↓

TL;DR

- Added support for Anthropic, Azure OpenAI & OpenRouter

- Better chat management: edit, regenerate, delete & fork messages

- Universal full-text search

- Advanced model configuration

- Custom System Instructions

- Custom GPT parameters: context limits, temperature, presence penalty, frequency penalty

- Custom API Endpoint & Proxy

- Automatically switch to GPT-3.5 16K if needed

Let's dive in.

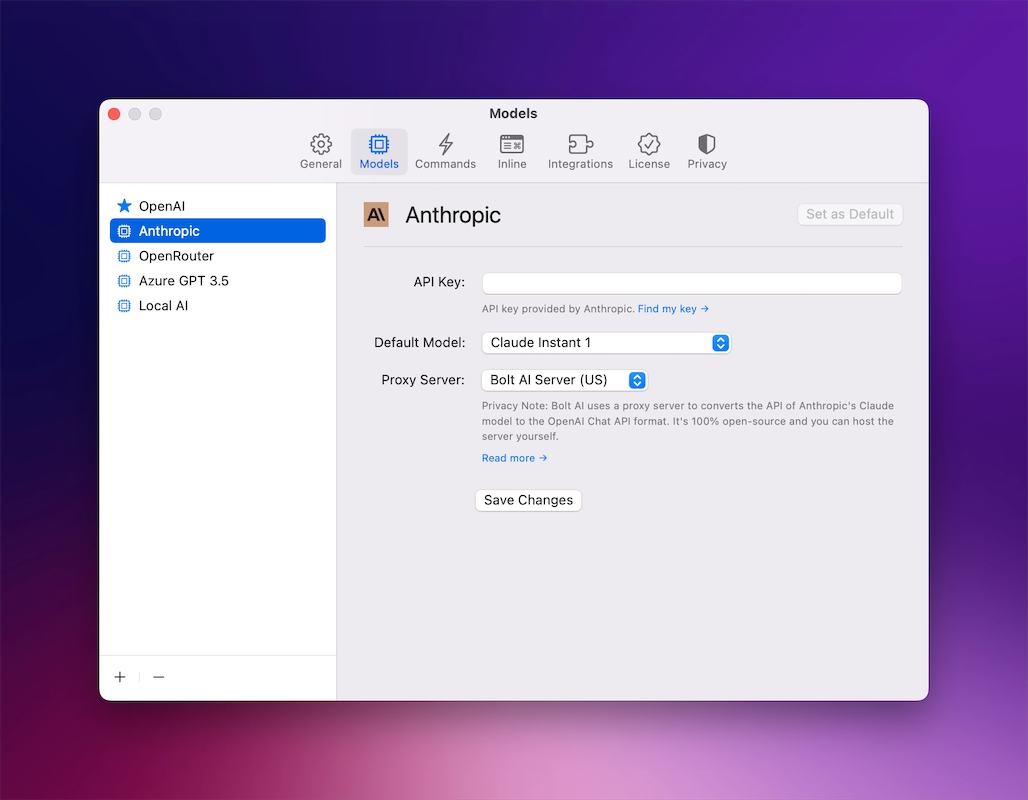

Support more AI models

This was the most requested feature for BoltAI. Good news: starting from v1.8, you can chat with different LLMs, including local open-source models via LocalAI.

Go to Settings › Models to get started. Below are step-by-step guides:

- How to set up BoltAI without an OpenAI API Key

- How to create an OpenRouter API key

- How to generate an Azure OpenAI API key

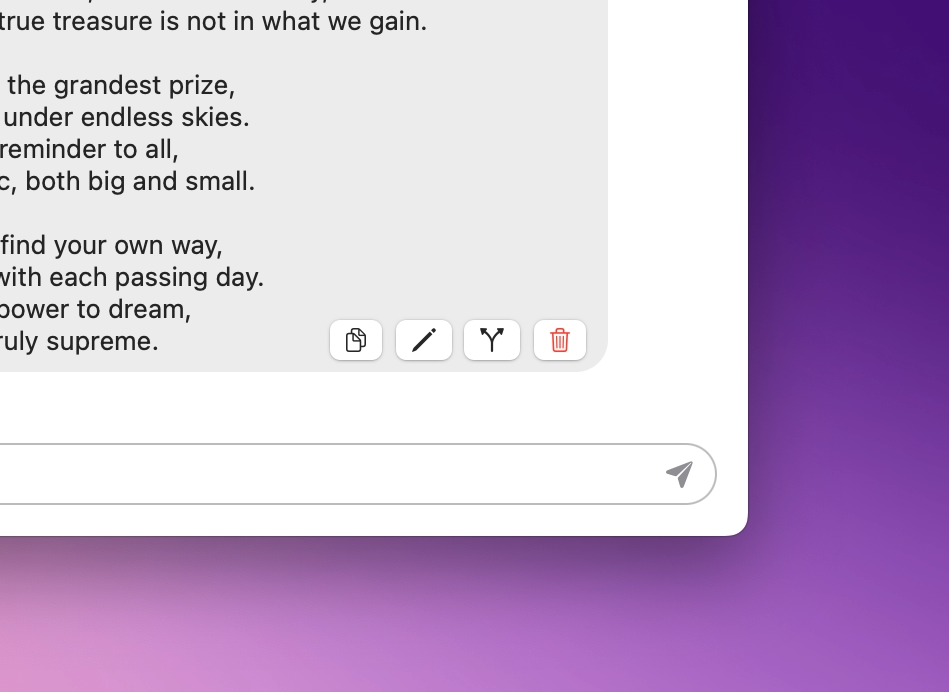

Better chat management

The accuracy of AI responses depends heavily on the context of the conversation. In this release, I've added the ability to Edit, Regenerate, Fork & Delete messages.

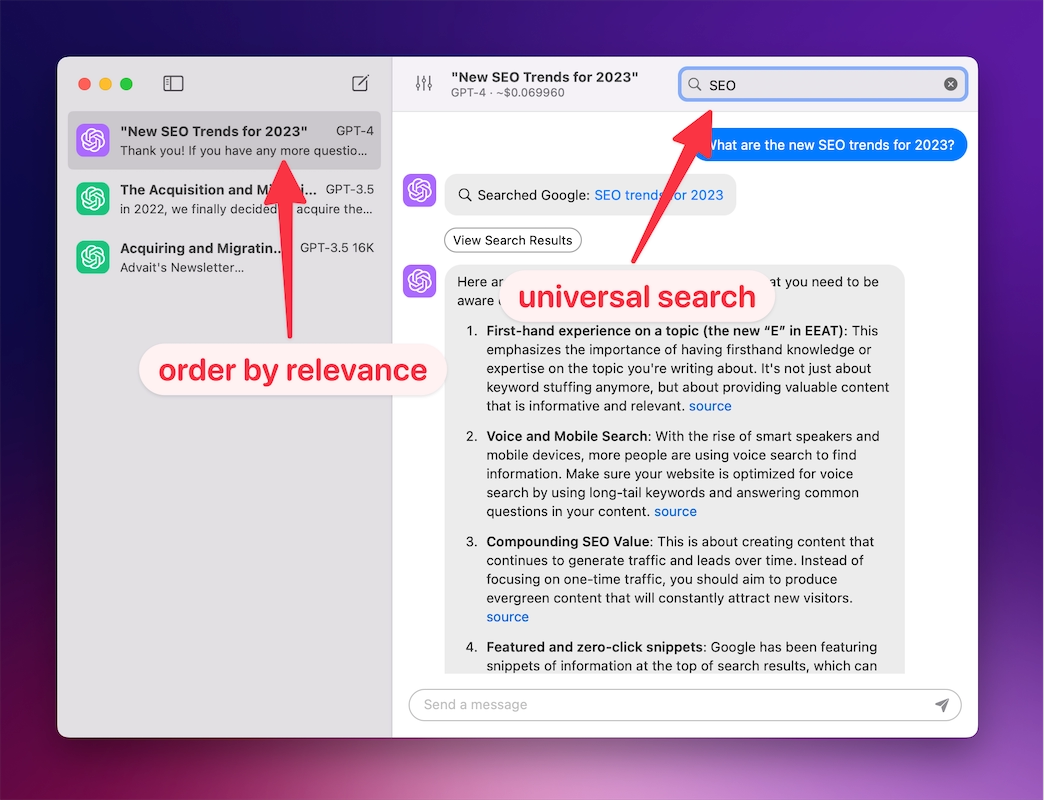

Universal full-text search

The search feature got a major upgrade! Before, searching was limited to the current conversation. Now you can perform a full-text search across all conversations.

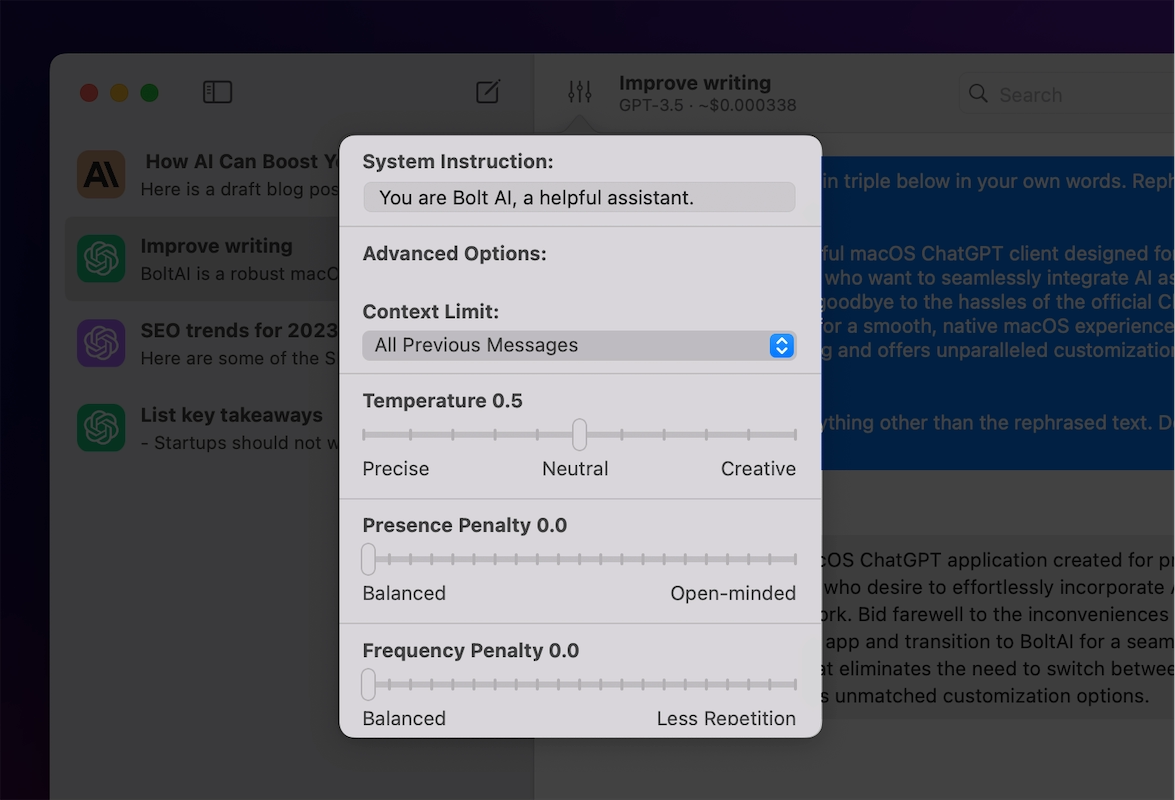

Advanced model configuration

Power users, this is for you. In this release, you can tweak more settings to further optimize your chat experience.

1. Custom system instructions & other GPT parameters

Open the Chat Configuration popover and scroll down to see all advanced parameters.

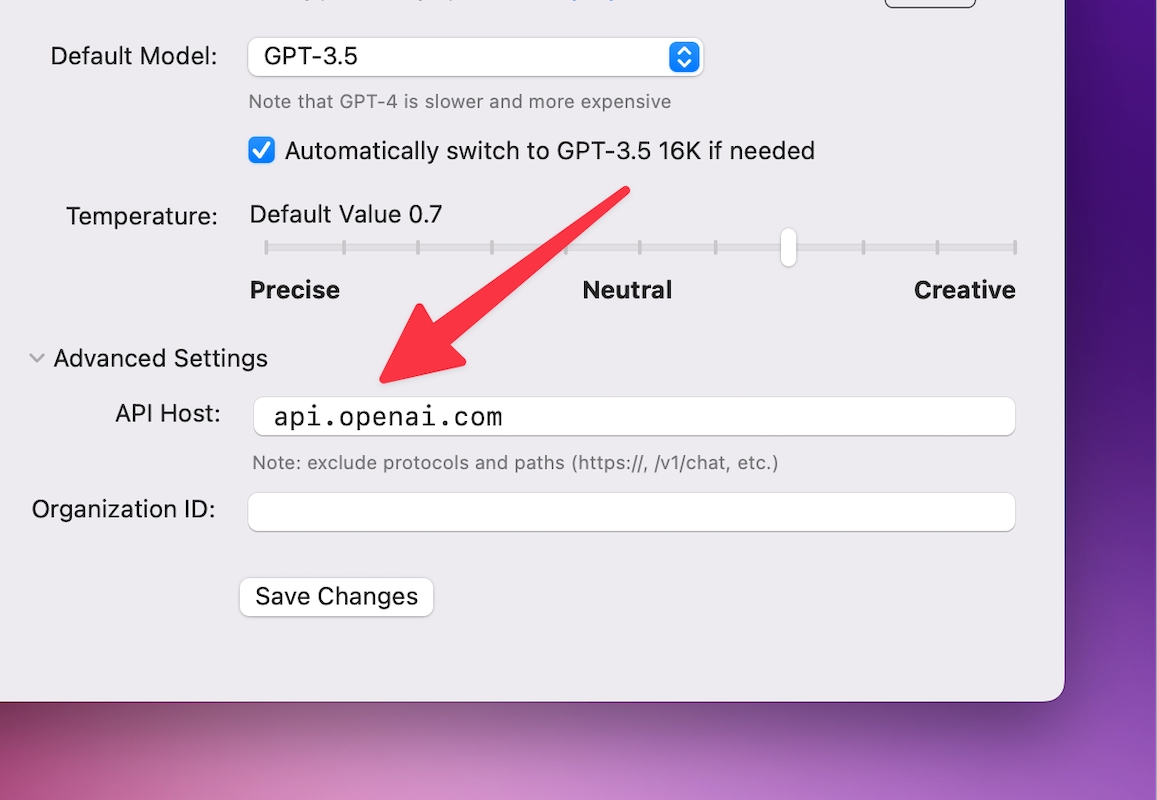
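For the curious, here is a minimal sketch of how these settings could map onto an OpenAI-style chat request. This is an illustration, not BoltAI's actual code: the function name `build_chat_request` and its defaults are hypothetical. The field names (`temperature`, `presence_penalty`, `frequency_penalty`) and their ranges follow the OpenAI Chat Completions API.

```python
def build_chat_request(system_instruction, user_message, *,
                       model="gpt-3.5-turbo",
                       temperature=1.0,
                       presence_penalty=0.0,
                       frequency_penalty=0.0):
    """Build a Chat Completions request body (hypothetical helper)."""
    # The OpenAI API accepts temperature between 0 and 2.
    if not 0.0 <= temperature <= 2.0:
        raise ValueError("temperature must be between 0 and 2")
    # Both penalties are accepted between -2 and 2.
    for name, value in (("presence_penalty", presence_penalty),
                        ("frequency_penalty", frequency_penalty)):
        if not -2.0 <= value <= 2.0:
            raise ValueError(f"{name} must be between -2 and 2")
    return {
        "model": model,
        "messages": [
            # The custom system instruction goes first in the conversation.
            {"role": "system", "content": system_instruction},
            {"role": "user", "content": user_message},
        ],
        "temperature": temperature,
        "presence_penalty": presence_penalty,
        "frequency_penalty": frequency_penalty,
    }


request = build_chat_request(
    "You are a concise technical editor.",
    "Summarize this release in one sentence.",
    temperature=0.2,
)
```

A lower temperature tends to give more focused, repeatable answers, while positive penalties nudge the model away from repeating itself.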

2. Custom API Endpoint & Proxy

Go to Settings › Models › OpenAI. You'll find the option under Advanced Settings.
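To give a rough idea of what a custom endpoint and proxy change under the hood, here is a small sketch using only Python's standard library. It is not how BoltAI is implemented: the helper names are hypothetical, and the local endpoint and proxy addresses in the usage lines are example values I'm assuming for illustration.

```python
import urllib.request

# Default base URL of the OpenAI API.
DEFAULT_BASE_URL = "https://api.openai.com/v1"


def chat_completions_url(base_url=DEFAULT_BASE_URL):
    """Join a (possibly custom) base URL with the chat completions path."""
    return base_url.rstrip("/") + "/chat/completions"


def make_opener(proxy_url=None):
    """Return a URL opener that routes requests through an optional proxy."""
    handlers = []
    if proxy_url:
        # Send both http and https traffic through the same proxy.
        handlers.append(
            urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
        )
    return urllib.request.build_opener(*handlers)


# Example values (assumptions): an OpenAI-compatible server on localhost
# and a local HTTP proxy.
url = chat_completions_url("http://localhost:8080/v1/")
opener = make_opener("http://127.0.0.1:3128")
```

The point of a custom endpoint is that any OpenAI-compatible server can stand in for the default one: only the base URL changes, and the request format stays the same.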

Minor improvements

1. Automatically switch to GPT-3.5 16K if needed

This is useful when you want to summarize a long article. By default, BoltAI uses GPT-3.5 to save costs, and falls back to GPT-3.5 16K for longer content.
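The idea can be sketched in a few lines. This is a simplified illustration, not BoltAI's actual logic: the function names, the 500-token reply budget, and the "about 4 characters per token" estimate are my assumptions. The model names and context sizes are those of OpenAI's `gpt-3.5-turbo` (4,096 tokens) and `gpt-3.5-turbo-16k` (16,384 tokens).

```python
DEFAULT_MODEL = "gpt-3.5-turbo"      # cheaper, 4,096-token context window
LONG_MODEL = "gpt-3.5-turbo-16k"     # pricier, 16,384-token context window
DEFAULT_CONTEXT = 4096
LONG_CONTEXT = 16384


def estimate_tokens(text):
    """Rough heuristic (assumption): about 4 characters per token."""
    return len(text) // 4 + 1


def pick_model(prompt, reply_budget=500):
    """Use the cheaper model unless prompt plus reply would not fit."""
    needed = estimate_tokens(prompt) + reply_budget
    if needed <= DEFAULT_CONTEXT:
        return DEFAULT_MODEL
    if needed <= LONG_CONTEXT:
        return LONG_MODEL
    raise ValueError("content is too long even for the 16K model")


short_choice = pick_model("Summarize: BoltAI v1.8 adds more providers.")
long_choice = pick_model("x" * 20000)  # about 5,000 estimated tokens
```

A real implementation would count tokens with a proper tokenizer rather than a character-based guess, but the decision itself is this simple: stay on the cheaper model whenever the content fits.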

2. Fixed the issue with Chat Title Generation

It should work correctly now. You can disable this feature in Settings › General.

What's next?

BoltAI started with the "inline" feature, which lets you integrate ChatGPT deeply into any Mac app. It's fun, unique and saves a lot of time.

But, it's also incredibly challenging to get right.

- From a technical perspective: each app has its own UI & custom shortcut keys, which makes it hard to maintain compatibility.

- From a user experience perspective: the lack of a dedicated UI makes it difficult to keep track of the AI responses.

So I decided to take a step back and start from zero: improve the Main Chat UI where it's guaranteed to work.

Now that the Chat UI is in good shape, I'm going to turn my full attention back to the Inline mode.

But I need your help. The inline feature requires deeper integration for each app, and I cannot support all macOS apps yet. So help me prioritize this.

Go to the BoltAI Roadmap page and suggest the apps you want supported first.

Thank you 🙏

If you are new here, BoltAI is a native macOS app that allows you to access ChatGPT inside any app. Download now.